Promises, Fallacies & Fantasies of AI

Co-Authored and Reviewed by Gagan Sandhu, MBA - The University of Chicago Booth School of Business, CEO of Xillion

3 min read

After spending 15 years working in the data & AI space, I have been both mesmerized by its potential and humbled by its limitations.

The latest generation of generative AI tools, such as ChatGPT, is no different. These tools wow you instantly, but they can’t be fully trusted, especially in areas where deep expertise is needed. And especially when your reputation or someone’s life is on the line!

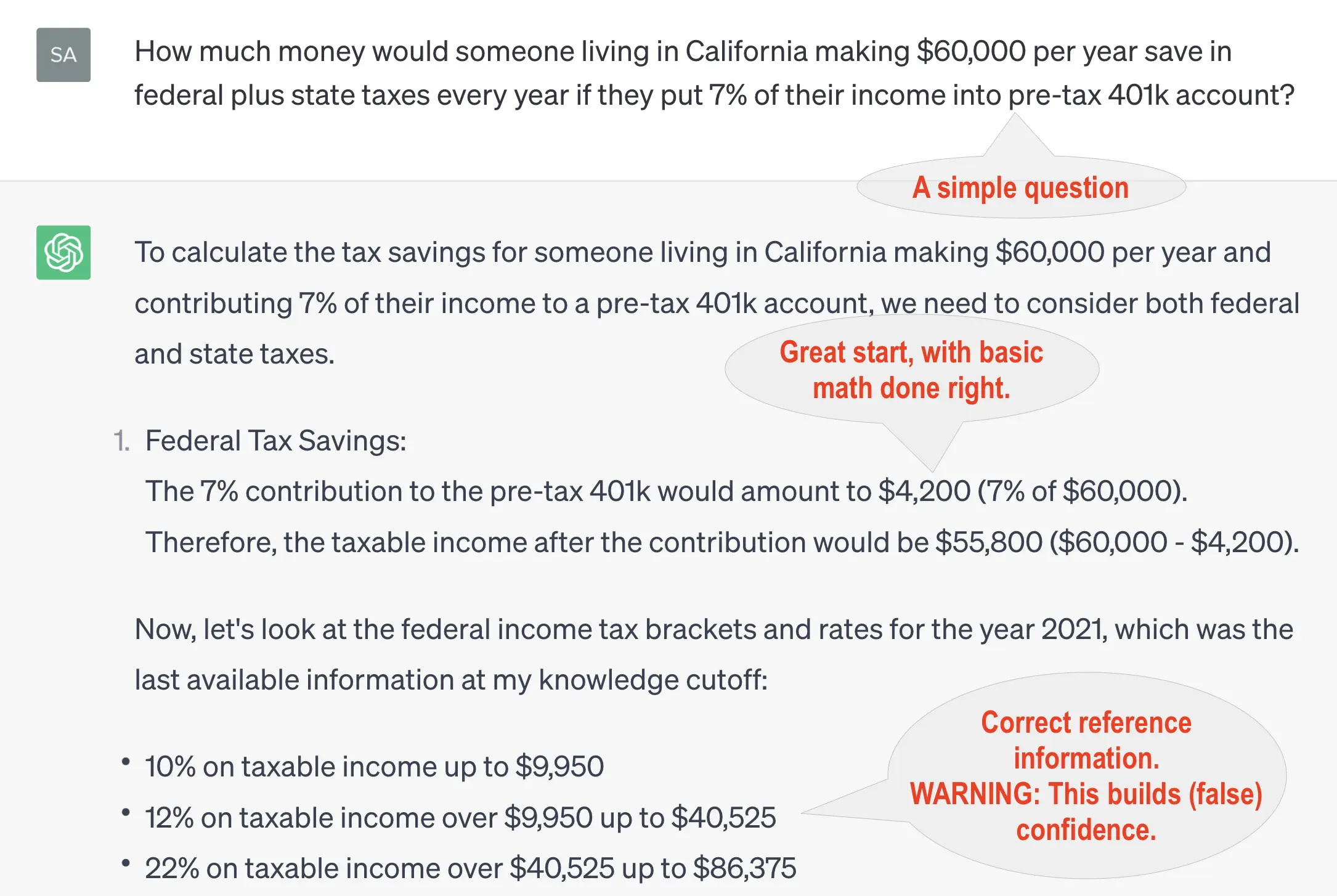

Let me walk through an example. I was recently chatting with a Xillion customer about 401(k) deductions and their tax benefits. I needed a quick calculation of their expected tax savings from making 401(k) contributions pre-tax instead of post-tax.

I asked ChatGPT expecting a quick answer. It started on a promising note, giving details of federal tax brackets and the marginal tax rates. It also did the first part of the calculation right. Everything looked promising at first glance.

But when it gave the final answer, it was completely wrong. It wasn’t even close. The result was 5x the rough estimate I had worked out in my head. I shared my own number with the customer and resolved to come back and research the miscalculation.

A bit of digging revealed that ChatGPT had hallucinated: it made up a number and used it to calculate the marginal tax (see pic 2). It took me around 5 minutes to find the exact place where ChatGPT went wrong. I don’t know why it made up the number, and I don’t think even the creators of this algorithm would be able to pinpoint exactly why this happened. That one hallucination changed the equation completely and rendered the entire effort useless.
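To make this kind of calculation concrete, here is a minimal sketch of a pre-tax savings estimate. The brackets, rates, and function names below are hypothetical and chosen only for illustration; they are not real federal figures and not the numbers from my conversation. The sketch ignores deductions, state taxes, and payroll taxes.

```python
# Hypothetical, simplified brackets: (lower bound of bracket, marginal rate).
# These are illustrative values only, NOT real federal tax brackets.
BRACKETS = [
    (0, 0.10),
    (10_000, 0.20),
    (50_000, 0.30),
]


def tax_owed(taxable_income, brackets=BRACKETS):
    """Progressive tax: each rate applies only to the income inside its bracket."""
    tax = 0.0
    for i, (lower, rate) in enumerate(brackets):
        # The upper edge of a bracket is the lower edge of the next one.
        upper = brackets[i + 1][0] if i + 1 < len(brackets) else float("inf")
        if taxable_income > lower:
            tax += (min(taxable_income, upper) - lower) * rate
    return tax


def pretax_savings(income, contribution, brackets=BRACKETS):
    """Tax saved when the contribution is deducted from taxable income."""
    return tax_owed(income, brackets) - tax_owed(income - contribution, brackets)


def savings_upper_bound(contribution, brackets=BRACKETS):
    """Sanity check: savings cannot exceed contribution x the top marginal rate."""
    return contribution * max(rate for _, rate in brackets)
```

With these made-up brackets, a 5,000 contribution on a 60,000 income sits entirely in the 30% bracket, so `pretax_savings(60_000, 5_000)` comes to about 1,500. The upper-bound check is the same kind of rough mental estimate that flags an answer that is off by 5x.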

Here’s my overall take on generative AI tools like ChatGPT: they’re great at regurgitating information that has been available in the public domain (the Internet) in large quantities for a long time. But they can’t do any real thinking, despite all the claims. I have spent a big chunk of my adult life building data & AI products, and I have seen that the promise of new technology generally exceeds its utility, sometimes by a wide margin.

What does all this mean for you? If you are a knowledge worker and you solve unique problems, focus on the aspects of your work that are specific to your role, company, or industry. For tasks where examples are widely available, such as common programming patterns or creating documentation, use AI tools, because those algorithms have been trained on millions of design documents, software programs, reports and the like.

I use ChatGPT to augment my research, instead of using a search engine, because it provides well-organized information that is easy for me to consume. But when I’m working on something that puts my reputation on the line, I do not rely on AI output. Perhaps this is why students are some of the biggest users of generative AI tools? Barring accusations of plagiarism, students face limited consequences when generative AI provides inaccurate information.

These accuracy pitfalls and domain limitations are exactly why I don’t expect AI tools to replace any meaningful part of knowledge jobs for the foreseeable future. While OpenAI and the AI industry are hard at work on making these products more accurate, these limitations are simply inherent to the technology. After all, generative AI is a word predictor: it uses the preceding words to predict which word is most likely to come next. That isn’t thinking. At least not in the way we humans do it.
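The "word predictor" idea can be illustrated with a toy sketch. This is a simple bigram counter, not how ChatGPT is built: real models use neural networks over sub-word tokens and much longer context. The function names and the sample sentence are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny made-up training text; a real model sees vastly more data.
model = train_bigram("the cat sat on the mat the cat ran")
```

Here `predict_next(model, "the")` picks "cat" simply because "cat" followed "the" most often in the training text. The predictor has no notion of whether the continuation is true, which is the intuition behind a confident but made-up number.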

I would love to hear your thoughts. What do you think of generative AI? How big of a limitation is the accuracy problem? How do you use AI in your work?